Over the last 3-6 months, I’ve had a ton of conversations around Secrets and Non-Human Identities (NHI), governance, authentication, and authorization, with many of them leading to the inevitable AI overlay of the same topic. I say it that way because we’re getting more mature when it comes to building and securing interactive and process-driven identity and security, but AI and agentic infrastructure are changing the paradigm. In a sense, we could always trust (no pun intended to my Zero Trust friends) that people or processes would operate within the bounds of the limitations and protections we put in place (hacking / vulnerabilities aside, but still to a certain limit). But in an agentic world, we are essentially releasing a bunch of uncontrolled, non-interactive processes that don’t know or understand those bounds, are intended to go around or discover other bounds, and, in a lot of use cases, are operating with our consent or on our behalf. In the rush to realize the value of AI, we’ve given it access and now need to build protections to enforce guardrails and limits.

Like most of us, I heard this from conversations and took to the books, papers, peers, and industry to see what controls, recommendations, and Best Practices we’re developing in this space. Along with this, I also looked at the vendors and tools that are being released and how they align with current Identity and Security infrastructure and user practices. What I found is we’re developing content, protocols, and recommendations at lightning speed given how this is all moving. All this is coming down to some key principles and guidance:

- Develop policies and governance for AI usage: What is the intent, how must it be secured, how do we respond, how do we audit and control.

- Build governance programs, principles, etc. for AI: Based on policy, intended use, and models, how are we managing usage, access, data, features and functionality, etc.

- Security and controls implementation: Based on policy, governance, and organizational requirements, how are we protecting users, data, and agents based on organizational capabilities.

- Monitoring and auditing capabilities: How are we building infrastructure, signals, etc. to know how AI is being used, what it is deriving, accessing, etc.

- Future proofing and moving with capabilities: While this probably should be part of the organizational governance, how are we building to support the next big model, capability, usage, etc.

Being a bit of a nerd and wanting to understand the infrastructure behind all of this, I focused my attention on the third and fifth of the key principles above (security and controls implementation, and future proofing). What I found was a mix of implementations, integrations, guidance, and products that fit point needs and solutions, but not a lot in terms of large-scale implementation and architectural patterns. There is plenty if you just focus on IAM or just on AI, but not a lot that builds to support both. At a conference a few weeks back, I had an opportunity to talk with someone who is building out pieces of this now, and he had a similar view: we have a lot of technology, but not a lot of proven patterns that meet the entirety of the needs of a modern organization.

Enter my crazy stubborn brain and wanting to build all this out!

Building the Proving Grounds

I set out to take the core principles for IAM (Governance, Access, and Privilege) and build out a mock enterprise layout that has the modern infrastructure in place and can account for agentic use cases. To make this more of a formal process, I built out a roadmap of what I am trying to achieve with recommended architecture, interactive flows, etc.

To start, I put together a simple execution roadmap for my plan (see below). It is pretty standard for any program I have built over the years, but it is required to make sure I stay aligned with what I am trying to achieve.

- Define the problem: How do we take all the existing identity and security guidance, tools, and principles and apply them to agentic use cases? This must be secure, integrate across use cases, and be usable by users and processes.

- Architecture: Build out a pattern of components, interactions, dependencies, and flows on how this all will work.

- With this, I also built out an option for myself, given I am mostly working with open source tools or services, so that I can build this out and validate it.

- Build / Integrate: Given the architecture, build out a demo environment that handles normal user activity and use cases, but also can handle agentic integrations and delegations.

- Test / Validate: Once it is built, build out demos, validate how all this works, and show the interactions.

- Publish: I plan to document this journey and disseminate my findings as I go so people can leverage them in their own journeys in this space.

Architecture

When I started researching this and putting things together, I found a mixed bag of architectures and patterns. To add to the challenge, products, integrations, and guidance are coming almost daily on ways to handle different use cases which each come with their own set of recommendations. All this is leading to point solutions to manage and secure specific use cases.

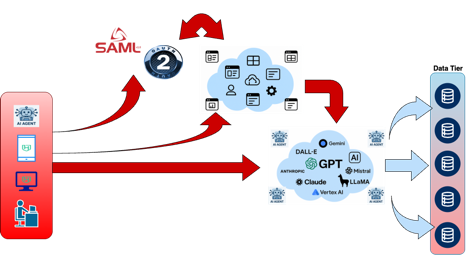

The early designs and patterns follow what you would probably see as a standard operating model for Identity Governance and Access Management use cases (see below). These largely focused on presenting AI applications and access using standard authentication and Identity Governance concepts. Access is then handed off to static secrets, keys, etc. which are intended to grant ‘someone’ access to a model, agent, etc.

This is leading to the explosion of secrets in our environments and gives little to no control over inputs and outputs when it comes to models, data, etc.

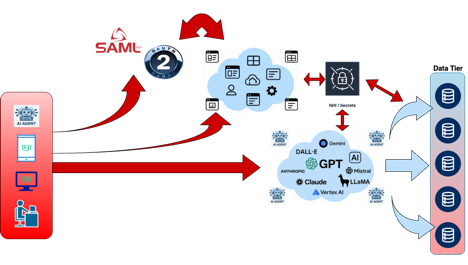

This, along with the explosion of cloud and automated processes, led to the wonderful work and products coming out of the Non-Human Identity (NHI) space. It’s awesome that we now formally have an OWASP Top 10 for NHI risks (see https://owasp.org/www-project-non-human-identities-top-10/2025/top-10-2025/). With this technology and guidance becoming mainstream, we’ve addressed a critical need in the agentic architecture: we now have secrets discovery, lifecycle, rotation, and management (see below). This addresses the need to manage the secrets used by applications, agents, etc. to use models and access data.

With secrets and other non-human identities being managed, we still have a lot of static credentials being used that may or may not tie directly to a user. Additionally, we don’t have a good way to control and put policy around agent and application access to models and data.

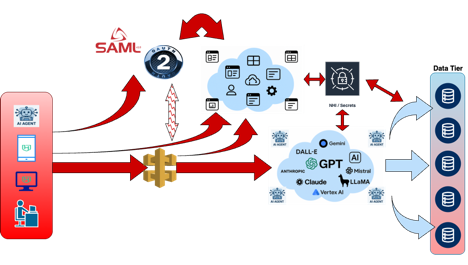

To start building guardrails and enforce access to models and data, we have the rise of AI gateways. Existing API management technology has been extended with new protocols to allow for enforcing policy like data access and authentication (see below). This is allowing for the start of governing AI and model access.

Now we have secure secrets with lifecycle management, user authentication and enforcement, and policy enforcement for models and access. The main limitation is that we are still using a lot of static credentials, governed by our Secrets / NHI management, to protect our AI and agentic use cases. We are still passing around static, but managed, credentials, and the explosion of secrets in our environments continues. Also, in some use cases (I fear this happens more than we would like), we are giving our (human issued / verified) tokens to agents, which then act on them.

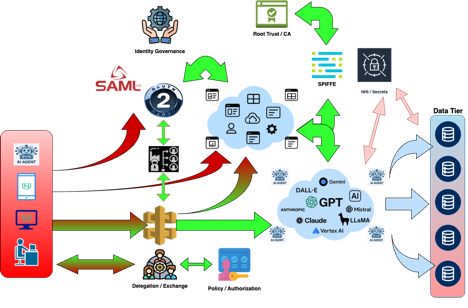

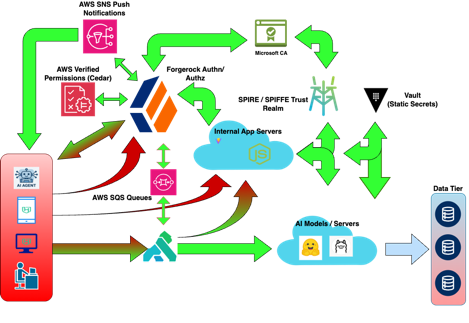

This is where we start bringing everything together. Using existing and some new protocols, we are seeing the emergence of things like SPIFFE, token exchanges, and dynamic authorization allowing for users to delegate credentials to agents acting on their behalf, establishing trusted workload tokens for ephemeral processes and agents, etc. (see below).

This is the model we want to get to in our environments. The keys to this agentic architecture are that access is user- and workload-based, delegated, and contextual, based on authorized use cases and consents.
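To make the delegation idea concrete, here is a minimal sketch of the form parameters for an OAuth 2.0 Token Exchange (RFC 8693) request in which a user’s token is the subject and the agent’s workload token is the actor. The parameter names and token type URNs come from RFC 8693; the function name, the audience value, and the scope string are hypothetical, and how a given authorization server (ForgeRock included) expects this request to be configured is not something this sketch assumes.

```python
from urllib.parse import urlencode

# Identifiers defined by RFC 8693.
TOKEN_EXCHANGE_GRANT = "urn:ietf:params:oauth:grant-type:token-exchange"
ACCESS_TOKEN_TYPE = "urn:ietf:params:oauth:token-type:access_token"
JWT_TOKEN_TYPE = "urn:ietf:params:oauth:token-type:jwt"


def build_delegation_request(user_token, agent_jwt, audience, scopes):
    """Return form parameters asking for a token the agent can use
    on the user's behalf (hypothetical helper, not a vendor API)."""
    return {
        "grant_type": TOKEN_EXCHANGE_GRANT,
        # The party the new token is about: the delegating user.
        "subject_token": user_token,
        "subject_token_type": ACCESS_TOKEN_TYPE,
        # The party that will act: the agent, identified by its workload JWT.
        "actor_token": agent_jwt,
        "actor_token_type": JWT_TOKEN_TYPE,
        # Narrow the result to one target service and a minimal scope.
        "audience": audience,
        "scope": " ".join(scopes),
    }


if __name__ == "__main__":
    params = build_delegation_request(
        user_token="<user access token>",
        agent_jwt="<agent workload JWT>",
        audience="ollama-api",       # hypothetical audience name
        scopes=["model:query"],      # hypothetical scope
    )
    # This string would be POSTed as application/x-www-form-urlencoded
    # to the authorization server's token endpoint.
    print(urlencode(params))
```

The point of the shape is that the agent never holds the user’s original token for its work; it receives a new, narrower token that records both who delegated and who is acting.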

The challenge is that there is no single ‘product’ that builds all this out; it requires multiple integrated products to provide a full solution. And, from the research I have done, there isn’t a single product or solution out there that has this documented to provide enterprise guidance.

This is my challenge and goal with this series of papers. How do we take this secure, scalable, contextual architecture with all the AI governance recommendations, and build out an architectural pattern? This must include products and configurations to provide an integrated solution people can use.

The Solution

Given the problem and proposed architecture, I am setting out to build this out using the tools available to me. I’m doing this largely using open-source tooling, given this is a private lab, but I do have some commercial products (mostly community editions) that I have access to through relationships and partnerships.

The full architecture for the build out is provided below.

In this solution, I’ll be using:

- AI Models / Servers: I have Ollama and Stable Diffusion model servers set up to allow for API calls and direct web access for agents and applications to simulate an enterprise use case.

- Applications: I have a demo React/Vite with NodeJS application to simulate AI model calls and some other demo services.

- Secrets Management: I am using HashiCorp Vault to provide static secrets management to applications, clients, and services (only where needed).

- SPIRE / SPIFFE: Workload clients and applications will request workload SVIDs, which can be exchanged in the authentication infrastructure for access tokens for target services.

- Central Certificate Authority: To establish common trust for tokens and SVIDs, I’ll be issuing certificates and tokens signed by a common root authority, which is my internal Microsoft Windows CA.

- Authentication and Delegation: ForgeRock will be the central authentication and authorization service for the environment. This will provide the token issuance, exchange, and authorization for access to services by users, agents, and workloads.

- Dynamic Authorization: I’m using policy sets in ForgeRock populated by authorization decisions from AWS Verified Permissions, since it provides scalable policies across different components.

- Push Notifications: For things like phishing-resistant MFA, I’ll be using ForgeRock authenticator with AWS SNS to prompt users for MFA when authenticating or delegating to an agent on their behalf.

- AWS SQS: Since agents may have access to services on behalf of users, we need a mechanism for publishing signals using CAEP / SSF, such as token revocation and other updates, in the event we need to disable or revoke access.

- AI Gateway: As a central policy enforcement point, we’ll be using Kong Gateway to publish services and do policy and token checking against issued tokens in the environment.
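As a rough illustration of the workload identity piece in the list above, the sketch below parses a SPIFFE ID and looks up which target services that workload may request tokens for. The `spiffe://trust-domain/path` shape is from the SPIFFE specification; the trust domain, the workload paths, the audience names, and both function names are hypothetical, and the validation is deliberately simplified rather than a full implementation of the spec’s character rules.

```python
from urllib.parse import urlparse

# Hypothetical lab values; a real deployment would load these from policy.
TRUSTED_DOMAINS = {"lab.example.org"}
AUDIENCES_BY_WORKLOAD = {
    "/agents/report-writer": {"ollama-api"},
    "/apps/demo-frontend": {"ollama-api", "stable-diffusion-api"},
}


def parse_spiffe_id(spiffe_id):
    """Split a SPIFFE ID into (trust_domain, path), rejecting anything
    that is not a spiffe:// URI from a trusted domain (simplified check)."""
    parsed = urlparse(spiffe_id)
    if parsed.scheme != "spiffe":
        raise ValueError("not a SPIFFE ID: " + spiffe_id)
    if not parsed.netloc or parsed.query or parsed.fragment:
        raise ValueError("malformed SPIFFE ID: " + spiffe_id)
    if parsed.netloc not in TRUSTED_DOMAINS:
        raise ValueError("untrusted trust domain: " + parsed.netloc)
    return parsed.netloc, parsed.path


def allowed_audiences(spiffe_id):
    """Return the target services this workload may exchange its SVID for."""
    _, path = parse_spiffe_id(spiffe_id)
    return AUDIENCES_BY_WORKLOAD.get(path, set())


if __name__ == "__main__":
    print(allowed_audiences("spiffe://lab.example.org/agents/report-writer"))
```

In the environment described here, the SVID’s signature would be verified against the common root authority before any lookup like this is trusted; that step is omitted from the sketch.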

Sequence Diagrams / Use Case

With the planned solution in place, it is important to document the intended interactive sequences for the different use cases. The different sequences covered by this include:

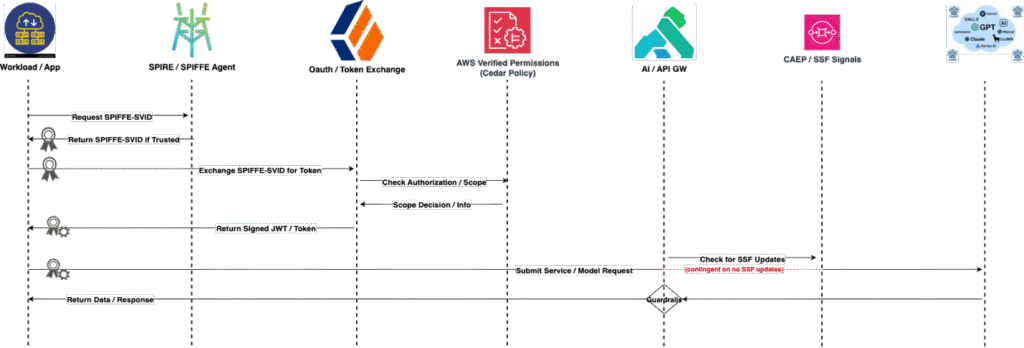

- Service-to-service: When services are connecting to each other, rather than using the standard client credentials grant, how do they securely authenticate and get a token in the environment?

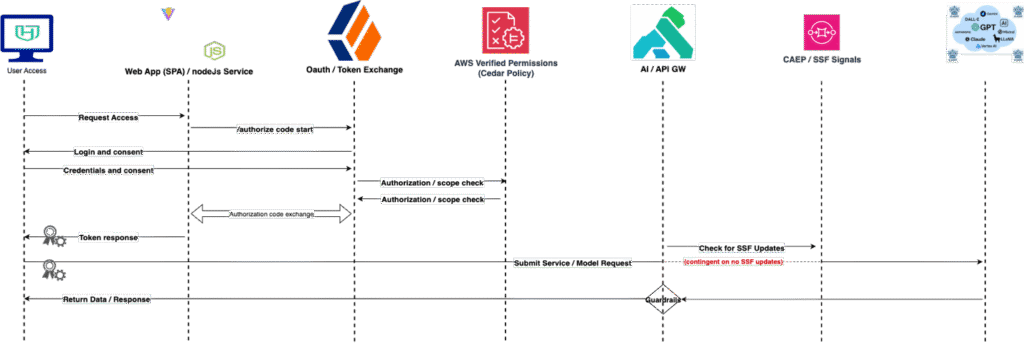

- User-to-service: When a user logs in to the target application and runs an AI query or use case, how does the application hand off to the target model service?

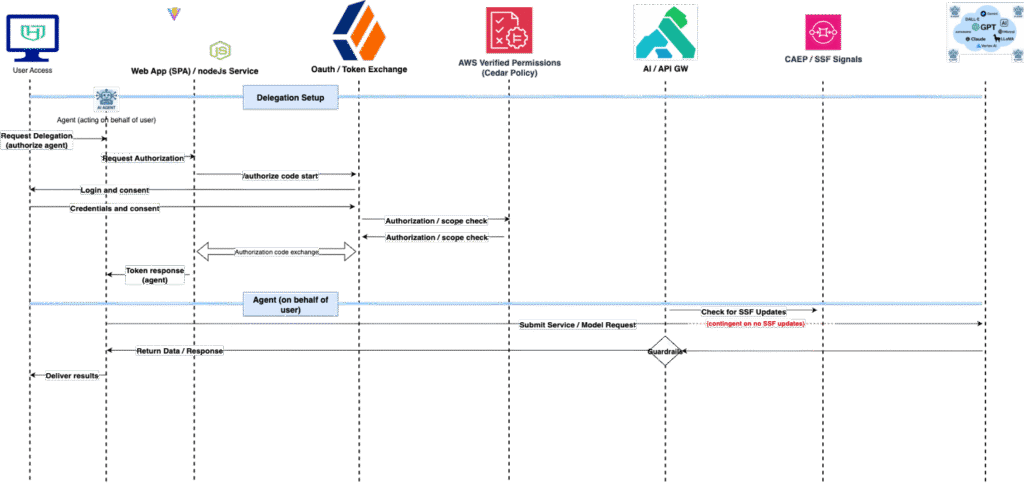

- User-to-agent-to-service: When an agent is acting on behalf of a user, how does the user delegate a token to the agent so it can complete a task and report results back to the user?
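For the user-to-agent-to-service sequence, a minimal sketch of the kind of check a policy enforcement point could run on a delegated token is shown below. It assumes the token’s signature has already been verified and the claims decoded into a dictionary. The `act` claim (with a nested `sub` for the acting party) and the space-delimited `scope` claim follow RFC 8693; the function name, audience, scope, and agent identifiers are hypothetical, and this is not a description of how Kong or ForgeRock implement the check.

```python
import time


def authorize_delegated_call(claims, expected_audience, required_scope,
                             allowed_agents, now=None):
    """Return (allowed, reason) for already-verified token claims."""
    now = time.time() if now is None else now

    # Short-lived tokens: reject anything past its expiry.
    if claims.get("exp", 0) <= now:
        return False, "token expired"

    # "aud" may be a single string or a list of strings.
    aud = claims.get("aud")
    audiences = [aud] if isinstance(aud, str) else list(aud or [])
    if expected_audience not in audiences:
        return False, "wrong audience"

    # "scope" is a space-delimited string of granted scopes.
    if required_scope not in claims.get("scope", "").split():
        return False, "missing scope"

    # A delegated token names the acting party in act.sub.
    actor = (claims.get("act") or {}).get("sub")
    if actor is None:
        return False, "no actor claim"
    if actor not in allowed_agents:
        return False, "agent not permitted"

    return True, "ok"


if __name__ == "__main__":
    claims = {
        "sub": "user:alice",                                   # delegating user
        "act": {"sub": "spiffe://lab.example.org/agents/report-writer"},
        "aud": "ollama-api",
        "scope": "model:query",
        "exp": time.time() + 300,
    }
    print(authorize_delegated_call(
        claims, "ollama-api", "model:query",
        {"spiffe://lab.example.org/agents/report-writer"},
    ))
```

Checking the actor separately from the subject is what lets the gateway tell "Alice called the model" apart from "an agent called the model for Alice", which is the distinction the delegated model depends on.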

Next Steps

I’m building this as a model for others to use in their agentic journeys. So, in that spirit, I’ll be adding blogs and publishing some GitHub repos for people to use during this process. My goal here is to show the full pattern of how organizations can leverage the tools available, integrate them, and build out secure scalable use cases.

If you have questions on the above, reach out; I would love to collaborate with people on building this out.

Either way, follow along as I build this out so we can all leverage this in securing our environments going forward.
